When I think about what data I’d like to see IT operations departments measure and report, two key questions come to mind: How happy are the users of the IT service, and how much disruption are IT failures causing to business users? I recently had the pleasure of seeing a demo of the latest version of the HappySignals IT metrics and benchmarking tool, and I was impressed because it provides both these metrics in a way that I found very helpful for IT service management (ITSM).

Measuring user satisfaction

Many organizations measure user satisfaction when they close incidents. To ensure you get the best possible feedback, there are some really important things to do:

- Make it very easy for your customers to give you feedback. A single button click should be all it takes to respond to “How happy are you with the service you received.”

- Provide a mechanism so that customers can, optionally, tell you why they gave you this score. Ideally allow them to answer with a general category, and to use their own words if they want to.

- Do be careful not to ask users for too much information. If I’m asked to fill out a survey that takes more than a few seconds then I often give up before submitting my response. If lots of your users do this then your response rate will be very low and you’ll end up with very poor quality data.

- Publish the feedback you get, and show how you are using this to improve. People are much more likely to offer you good feedback if they see that you’re listening. If you’re not going to use the data to help you plan continual improvement, then there’s little point in collecting it.

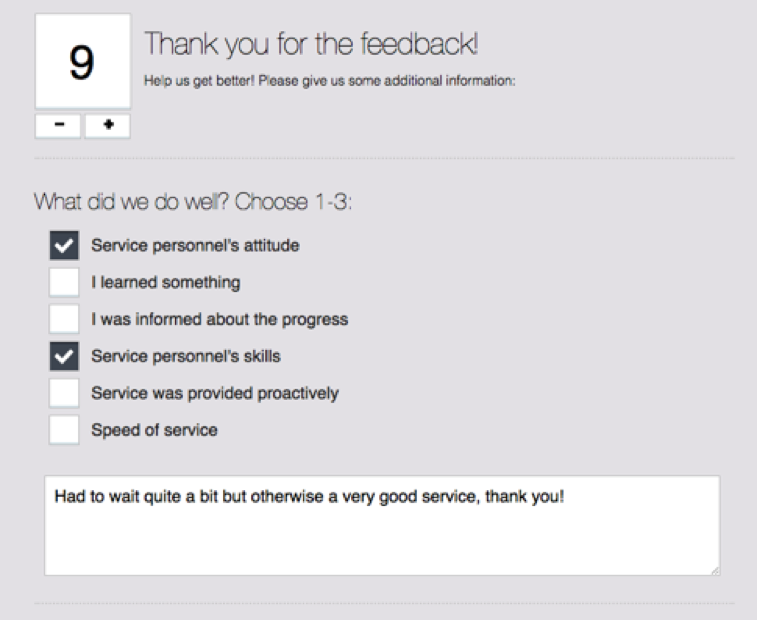

As you can see from the screenshot above, the HappySignals tool allows users to provide feedback with a single click, and the satisfaction rating is combined with incident closure. This increases the likelihood that users will provide a satisfaction rating, which improves the quality of the data.

Once users have given a satisfaction rating, the tool invites them to offer reasons for it, either by clicking on a general category or in their own words. The form matches the wording of this invitation to the feedback score. For example, the form asks “What did we do well?” to match a feedback score of 9 out of 10.

What makes this tool particularly useful is that it includes benchmark data from hundreds of thousands of users from many different organizations, so that you can compare your own performance with that of other IT service providers.

Measuring business disruption (and the HappySignals approach)

Very few organizations that I’ve worked with collect data on the actual business impact of incidents. They may measure the duration of the incident by comparing the time that an incident is logged with the time it’s closed, but this doesn’t give a good measurement of the user impact. Without impact data, it is difficult to prioritize when you are planning improvements to IT services, or to demonstrate the effect that those improvements have had. If you don’t already collect this type of data, it’s well worth considering.

I really like the approach used by HappySignals to collect data that measures business impact. It simply asks people to estimate how much working time they lost to an incident.

Data of this type can provide very useful insight into the impact different types of incident have on a person’s ability to get on with their job. The data is associated with the specific incident ticket, and this makes it easy to analyze by service, or geography, or user role, or any other category that you store with your incidents. Once you have a good grasp of the impact of specific types of incidents you’re much more able to plan relevant and effective service improvements.
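As a rough illustration of this kind of analysis, here is a minimal Python sketch that groups user-reported lost time by any category stored on the incident. The `Incident` record, its field names, and the sample figures are all hypothetical; they are not taken from HappySignals or from any particular ITSM tool.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Incident:
    # Hypothetical record: field names are illustrative only.
    ticket_id: str
    service: str
    region: str
    lost_minutes: int  # the user's own estimate of lost working time


def lost_time_by(incidents, key):
    """Return {category: (incident_count, total_lost_minutes, mean_lost_minutes)}."""
    totals = defaultdict(lambda: [0, 0])
    for inc in incidents:
        bucket = totals[getattr(inc, key)]
        bucket[0] += 1
        bucket[1] += inc.lost_minutes
    return {
        category: (count, total, total / count)
        for category, (count, total) in totals.items()
    }


# Made-up sample data, purely for illustration.
incidents = [
    Incident("INC-1", "email", "EMEA", 30),
    Incident("INC-2", "email", "APAC", 90),
    Incident("INC-3", "vpn", "EMEA", 240),
]

# List services with the largest total lost time first.
by_service = lost_time_by(incidents, "service")
for service, (count, total, mean) in sorted(
    by_service.items(), key=lambda item: item[1][1], reverse=True
):
    print(f"{service}: {count} incidents, {total} min lost, {mean:.0f} min average")
```

Passing `"region"` instead of `"service"` gives the same breakdown by geography, which is the point of storing the estimate against the incident ticket.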

The HappySignals benchmarking data shows that average lost working time for almost 100,000 incidents is 2 hours 48 minutes. I was surprised that this is so low. How does it compare to your users’ experience?

What else should an IT department know about metrics?

Of course, while measures of user satisfaction and business disruption are invaluable, they will not, on their own, enable you to make the best possible use of measurement in planning and delivering excellent IT services. If you wish to explore this topic in more depth – and I hope that you do – I have written several blogs about IT service management metrics which you might find useful as starting points. They include:

- Your Metrics are Not Your Goals, which explains the importance of understanding how metrics affect behaviour.

- Defining Metrics for a Help Desk, which suggests specific objectives and KPIs that might help to measure the effectiveness of a help desk.

- How You Can and Should Manage Availability of IT Services, which discusses the importance of understanding how IT services support business processes and what the impact of availability failure is over different time periods.

You can find these, and other similar blogs, by searching Stuart Rance blogs for ‘metrics’.

Conclusion and the need for HappySignals or similar

How well does your organization collect user satisfaction data? Do your users willingly offer you feedback because they know that you’re going to act on it, or do you have a user survey with very low response rates that’s only used for reporting how well you did?

What about business disruption? Do you know which of your services leads to the most lost working time for your users? Do you publish this data, along with trends to help your users see the impact of the improvements you’re making?

If you don’t collect good quality user feedback when you close incidents, then maybe it’s time to look at how easy it is for your users to give you the feedback you need to run, and improve, your business. The HappySignals approach is one example of how this can be done.

Stuart Rance

Stuart Rance is a consultant, trainer, and author with an international reputation as an expert in ITSM and information security. He was a lead architect and lead editor for ITIL 4, and the lead author for RESILIA™: Cyber Resilience Best Practice. He writes blogs and white papers for many organizations, including his own website.

Stuart is a lead examiner for ITIL, chief examiner for RESILIA, and an instructor for ITIL, CISSP, and many other topics. He develops and delivers custom training courses, and delivers presentations on many topics, for events such as itSMF conferences and for private organizations.

In addition to his day job, he is also an ITSM.tools Associate Consultant.
